Interesting one today:

Occasionally, when we restore databases from backups, we need to fix orphan users. Recently, a few years ago, we discussed about fixing orphan users using script. Here we’ll look at a scenario, where the regular orphan users script will not work.

Error Message:

Msg 15600, Level 15, State 1, Procedure sp_change_users_login, Line 214 [Batch Start Line 61]

An invalid parameter or option was specified for procedure ‘sys.sp_change_users_login’.

The script “EXEC sp_change_users_login 'Auto_Fix', 'user'” only works for Sql Authentication based logins and not for Windows-level principals or non-login based users. See MSDN article for more details.

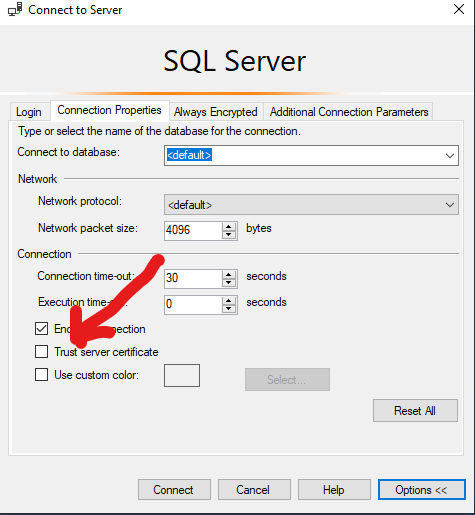

A user without login would look like this, in SSMS:

In such scenarios,

- sp_change_users_login cannot be used to map database users to Windows-level principals, certificates, or asymmetric keys.

- sp_change_users_login cannot be used with a SQL Server login created from a Windows principal or with a user created by using CREATE USER WITHOUT LOGIN.